- Karol Sobota


Often, when we start a new project, we want to stick to the best practices and rules we worked out on the previous one. One of them could be test-driven development (TDD), another could be writing tests extensively. Following these rules, we truly believe that most errors can be eliminated while the code is still being written and that, as a consequence, we won’t have to waste time correcting what we have written.

Full of optimism, we get down to coding: we write tests that reflect how the system should behave, and we add business logic that makes all of those tests pass. Ready, steady, green light: that’s the sign to push our code to the repository. As soon as possible, we deploy the very first version of the app to the development environment.

The app seems to be working fine, but we want to give our work one last check. We call the endpoint of the newly added service, and instead of getting:

„Hello World”

we get the following response:

„500 – Internal Server Error”

… and that brings our short journey of optimism to an end.

How is that even possible, you may ask yourself. What is the point of all this test writing if we still have to debug almost everything in the environment anyway?

One possible cause is that the tests skip the operations performed on the database, or perform them on a database other than the one available in the target environment.

As a solution to this problem, we’d like to show you how to write integration tests in an easy and transparent way.

Ways of testing database queries

One of the most common approaches is to use an in-memory database such as H2 or Fongo (Fake MongoDB). This seems like a really good solution, because we don’t have to install and manage the database as a separate application. All we need to do is configure our tests; the database is launched automatically when they run.

However, this solution has one serious drawback:

- Databases of this type do not provide the same features as the real database. That may lead to a situation in which we rewrite a query so that it works on both the test and the production database, at the cost of its readability or performance.

Another approach is to set up a dedicated instance of the same database that is used in production. Additionally, to eliminate differences between how the database behaves in production and on our computer, it can be launched in a Docker container, which gives us identical runtime conditions.

Drawbacks of the second approach:

- first, you have to prepare an appropriate database configuration;

- you have to launch the instance manually before running the tests.

The third approach, and the one we are going to look at more closely, is to use the Testcontainers library. The library automates launching a specific database in a Docker container. All it requires is some simple configuration.

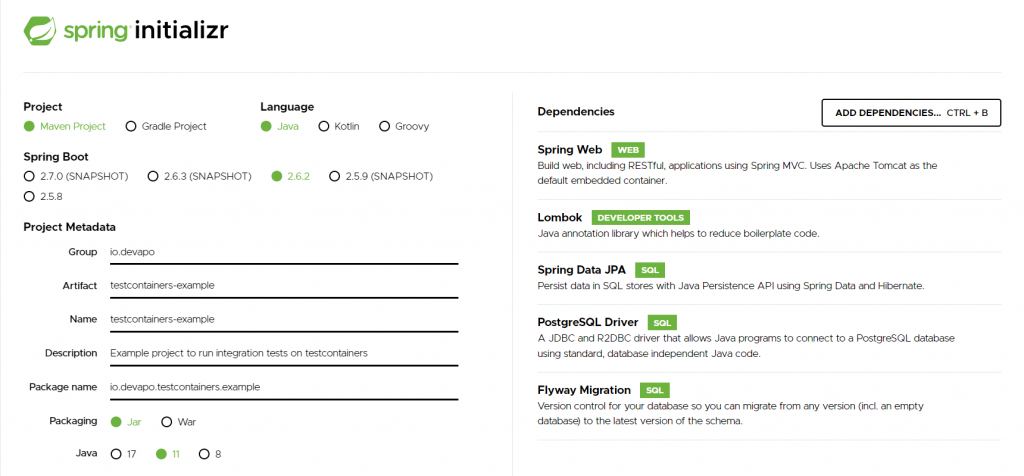

Let’s use Spring Boot to create an app as an example

To create our sample application quickly and easily, we are going to use the Spring Initializr tool available at: https://start.spring.io/

For our project we are going to use several libraries that will allow us to write fully functional integration tests. That’s why, when creating the project, we will add the following dependencies:

- spring web – turns our application into a web application: it is launched at a specific address and port and listens for user requests,

- lombok – lets us write the code needed for this example faster,

- spring data jpa – lets us express the database model as Java code,

- flyway migration – lets us write simple scripts that initialize our database (by creating a table),

- postgresql – last but not least, enables communication with the database, in this case Postgres.

The configuration of our application, which we will generate, should eventually look like this:

We create several directories and files in our newly generated project, so that the structure looks exactly as shown in the screenshot below:

Careful here! Create the “db/migration” directory by creating the db directory first and then the migration directory inside it. IntelliJ displays this nested directory as “db.migration”, which looks exactly the same as a single directory literally named “db.migration”. This can be really confusing and lead to a problem that is hard to track down.
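One way to catch this mistake early is a small sanity check of the resources directory. The sketch below is only an illustration: the class name `MigrationDirCheck` and its methods are hypothetical, and it assumes Flyway’s default migration location, `db/migration` on the classpath.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper, not part of the sample application.
final class MigrationDirCheck {

    private MigrationDirCheck() {
    }

    // Returns true only when "migration" is a real directory nested inside "db".
    // A single directory literally named "db.migration" does not count.
    static boolean hasNestedMigrationDir(Path resourcesRoot) {
        return Files.isDirectory(resourcesRoot.resolve("db").resolve("migration"));
    }

    // Returns true when the confusing single directory "db.migration" exists.
    static boolean hasFlatMigrationDir(Path resourcesRoot) {
        return Files.isDirectory(resourcesRoot.resolve("db.migration"));
    }

    // Creates a temporary resources directory with either the nested (correct)
    // or the flat (wrong) layout, so that both cases can be tried out.
    static Path demoLayout(boolean nested) {
        try {
            Path root = Files.createTempDirectory("resources");
            if (nested) {
                Files.createDirectories(root.resolve("db").resolve("migration"));
            } else {
                Files.createDirectory(root.resolve("db.migration"));
            }
            return root;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(hasNestedMigrationDir(demoLayout(false))); // false: wrong layout
        System.out.println(hasNestedMigrationDir(demoLayout(true)));  // true: correct layout
    }
}
```

In a real project the check would be pointed at src/main/resources instead of a temporary directory.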

Below, you can see the content of all files from the image above. Together they make up the source code of our sample application, which lets you save Post data (e.g. on a forum) in the database. In a real application, the code would perform additional operations such as data validation, saving the history of operations, or error handling. They are omitted here because the save operation alone is enough to write an integration test.

Post.java file

    package io.devapo.testcontainers.example.model;

    import lombok.AllArgsConstructor;
    import lombok.Builder;
    import lombok.Getter;
    import lombok.NoArgsConstructor;
    import lombok.Setter;

    import javax.persistence.Column;
    import javax.persistence.Entity;
    import javax.persistence.GeneratedValue;
    import javax.persistence.Id;
    import java.util.UUID;

    @Builder
    @Getter
    @Setter
    @AllArgsConstructor
    @NoArgsConstructor
    @Entity
    public class Post {

        @Id
        @GeneratedValue
        @Column(columnDefinition = "uuid", updatable = false)
        private UUID id;

        // Mirrors the NOT NULL constraint on the content column in V01__init.sql.
        // This replaces com.sun.istack.NotNull, an internal annotation with no effect on JPA.
        @Column(nullable = false)
        private String content;
    }

PostController.java file

    package io.devapo.testcontainers.example.controller;

    import io.devapo.testcontainers.example.model.Post;
    import io.devapo.testcontainers.example.service.PostService;
    import lombok.RequiredArgsConstructor;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.PostMapping;
    import org.springframework.web.bind.annotation.RequestBody;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    import java.util.List;

    @RequestMapping(value = "/posts")
    @RestController
    @RequiredArgsConstructor
    public class PostController {

        private final PostService postService;

        @PostMapping
        public Post create(@RequestBody String content) {
            return postService.create(content);
        }

        @GetMapping
        public List<Post> findAll() {
            return postService.findAll();
        }
    }

PostService.java file

    package io.devapo.testcontainers.example.service;

    import io.devapo.testcontainers.example.model.Post;
    import io.devapo.testcontainers.example.repository.PostRepository;
    import lombok.RequiredArgsConstructor;
    import org.springframework.lang.NonNull;
    import org.springframework.stereotype.Service;

    import javax.transaction.Transactional;
    import java.util.List;

    @Transactional
    @Service
    @RequiredArgsConstructor
    public class PostService {

        private final PostRepository postRepository;

        // Builds a new Post from the given content and writes it to the database immediately.
        // @NonNull (Spring) replaces com.sun.istack.NotNull, which is an internal annotation.
        public Post create(@NonNull String content) {
            Post post = Post.builder().content(content).build();
            return postRepository.saveAndFlush(post);
        }

        public List<Post> findAll() {
            return postRepository.findAll();
        }
    }

PostRepository.java file

    package io.devapo.testcontainers.example.repository;

    import io.devapo.testcontainers.example.model.Post;
    import org.springframework.data.jpa.repository.JpaRepository;
    import org.springframework.stereotype.Repository;

    import java.util.UUID;

    @Repository
    public interface PostRepository extends JpaRepository<Post, UUID> {
    }

V01__init.sql file

    CREATE TABLE post (
        id UUID NOT NULL PRIMARY KEY,
        content TEXT NOT NULL
    );

Testcontainers library configuration

As part of this project, we will write tests using the Spock library and the Groovy language. Therefore, we need to add the following dependencies to the pom.xml file in the main application directory:

    <dependency>
        <groupId>org.spockframework</groupId>
        <artifactId>spock-core</artifactId>
        <version>${spock.version}</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.spockframework</groupId>
        <artifactId>spock-spring</artifactId>
        <version>${spock.version}</version>
        <scope>test</scope>
    </dependency>

The newest versions of these libraries are published on Maven Central.

After adding the dependencies, we need to create some files so that the src/test directory looks like this:

Careful now, vol. 2! Create the files in the src/test/groovy directory by right-clicking the directory and selecting New -> Groovy Class, not New -> Java Class.

Content of files:

BaseITSpecification.groovy file – this file contains the source code of our first integration test

    package io.devapo.testcontainers.example

    import org.springframework.boot.test.context.SpringBootTest
    import org.springframework.test.context.ActiveProfiles
    import spock.lang.Specification

    import static org.springframework.boot.test.context.SpringBootTest.WebEnvironment.RANDOM_PORT

    @SpringBootTest(webEnvironment = RANDOM_PORT)
    @ActiveProfiles("test")
    class BaseITSpecification extends Specification {

        def "should successfully load context"() {
            expect:
            true
        }
    }

Let’s quickly go through the instructions in this file and what each of them does:

extends Specification – inheriting from this class means that the class and the methods inside it can be run by the test engine on the JUnit platform. The class also provides tools that make writing tests easier.

@SpringBootTest(webEnvironment = RANDOM_PORT) – when this annotation is placed on a test class, the Spring Boot context starts as soon as the test is launched, and the application listens on a random free port. As part of this startup, a connection to the database is established. This annotation is what makes our tests integration tests. Without it, the class can still be used to write unit tests.

@ActiveProfiles("test") – here we define which profile to use. In this case it is the test profile, which means that the settings from the application-test.properties file (described below) will be applied.

def "should successfully load context"() { expect: true } – this block is a very simple integration test. We use it to check that the Spring Boot context starts properly. The test needs no statement other than expect: true, because if the context fails to start, the test fails before it reaches that line.

Let’s talk about the application-test.properties file. It defines the parameters of the application when it runs in test mode.

    spring.datasource.url=jdbc:tc:postgresql:14.1://localhost:10000/test-database
    spring.datasource.username=aaa
    spring.datasource.password=
    spring.datasource.driverClassName=org.testcontainers.jdbc.ContainerDatabaseDriver
    spring.jpa.properties.hibernate.dialect=org.hibernate.dialect.PostgreSQL95Dialect
    spring.jpa.generate-ddl=true
    spring.jpa.hibernate.ddl-auto=validate

Let’s pause here again to explain what each statement in this file does:

- Statements that begin with spring.datasource.

These specify all the parameters of the database that the application connects to when the Spring Boot context starts. Since the Testcontainers library takes care of creating the database in the container, values such as the database name, user, password, or port can be set to anything. What matters is that the url value remains a JDBC connection string that starts with jdbc:tc: and names the database type and image tag (here postgresql:14.1). Also keep in mind that the driverClassName parameter must be set to:

org.testcontainers.jdbc.ContainerDatabaseDriver.

This is also the place where we could instead point the application at a database that we start manually.

- Statements that begin with spring.jpa.

These parameters make Hibernate verify that the entity classes (in our case Post.java) are consistent with the database structure.
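To make the structure of the spring.datasource.url value more concrete, here is a small sketch that pulls the database type and the image tag out of such a URL. It is only an illustration: `TcJdbcUrl` and its methods are hypothetical names, and this is not how Testcontainers parses the URL internally.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration only; Testcontainers has its own parser.
final class TcJdbcUrl {

    // jdbc:tc:<database type>[:<image tag>]://<host, port and database name>
    private static final Pattern TC_URL =
            Pattern.compile("^jdbc:tc:([a-z0-9]+)(?::([^:/]+))?://.*$");

    private TcJdbcUrl() {
    }

    // Returns the database type, e.g. "postgresql".
    static String databaseType(String url) {
        return match(url).group(1);
    }

    // Returns the image tag, or null when the URL does not pin one.
    static String imageTag(String url) {
        return match(url).group(2);
    }

    private static Matcher match(String url) {
        Matcher matcher = TC_URL.matcher(url);
        if (!matcher.matches()) {
            throw new IllegalArgumentException("Not a jdbc:tc: URL: " + url);
        }
        return matcher;
    }

    public static void main(String[] args) {
        String url = "jdbc:tc:postgresql:14.1://localhost:10000/test-database";
        System.out.println(databaseType(url)); // postgresql
        System.out.println(imageTag(url));     // 14.1
    }
}
```

The 14.1 tag extracted here is the same one that appears in the container logs as postgres:14.1.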

Before running the test, we need to add the Testcontainers dependencies, which are responsible for launching and managing the test database, to the pom.xml file in the project root.

    <dependency>
        <groupId>org.testcontainers</groupId>
        <artifactId>testcontainers</artifactId>
        <version>${testcontainers.version}</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.testcontainers</groupId>
        <artifactId>spock</artifactId>
        <version>${testcontainers.version}</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.testcontainers</groupId>
        <artifactId>postgresql</artifactId>
        <version>${testcontainers.version}</version>
        <scope>test</scope>
    </dependency>

The newest versions of these libraries are published on Maven Central.

Moreover, before running the test, we need to start Docker locally. If we don’t, then shortly after running the “should successfully load context” test from the BaseITSpecification file we will get the following exception:

Caused by: java.lang.IllegalStateException: Could not find a valid Docker environment. Please see logs and check configuration.

If you use Windows, all you need to do to run Docker is start the Docker Desktop application.

Careful again! In some cases, it may be necessary to enable the option “Expose daemon on tcp://localhost:2375 without TLS”, which is available in the Docker Desktop settings.

If you use Linux, all you have to do is start the Docker service with the following command:

sudo service docker start

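If Docker has not been configured before and the test fails with an “access denied” message, the command below may help. Keep in mind that it gives every local user access to the Docker daemon, so it is best limited to a development machine; adding your user to the docker group is the more common long-term fix.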

sudo chmod 666 /var/run/docker.sock

Once Docker is running correctly, rerunning the test should succeed, and the console should show output similar to the following:

2022-01-07 00:02:28.065 INFO 8988 --- [ main] o.t.d.DockerClientProviderStrategy : Loaded org.testcontainers.dockerclient.NpipeSocketClientProviderStrategy from ~/.testcontainers.properties, will try it first

2022-01-07 00:02:28.350 INFO 8988 --- [ main] o.t.d.DockerClientProviderStrategy : Found Docker environment with local Npipe socket (npipe:////./pipe/docker_engine)

2022-01-07 00:02:28.351 INFO 8988 --- [ main] org.testcontainers.DockerClientFactory : Docker host IP address is localhost

2022-01-07 00:02:28.375 INFO 8988 --- [ main] org.testcontainers.DockerClientFactory : Connected to docker:

Server Version: 20.10.11

API Version: 1.41

Operating System: Docker Desktop

Total Memory: 22329 MB

2022-01-07 00:02:28.377 INFO 8988 --- [ main] o.t.utility.ImageNameSubstitutor : Image name substitution will be performed by: DefaultImageNameSubstitutor (composite of 'ConfigurationFileImageNameSubstitutor' and 'PrefixingImageNameSubstitutor')

2022-01-07 00:02:28.564 INFO 8988 --- [ main] o.t.utility.RegistryAuthLocator : Credential helper/store (docker-credential-desktop) does not have credentials for index.docker.io

2022-01-07 00:02:30.941 INFO 8988 --- [eam--1007472360] org.testcontainers.DockerClientFactory : Starting to pull image

2022-01-07 00:02:30.959 INFO 8988 --- [eam--1007472360] org.testcontainers.DockerClientFactory : Pulling image layers: 0 pending, 0 downloaded, 0 extracted, (0 bytes/0 bytes)

2022-01-07 00:02:32.170 INFO 8988 --- [eam--1007472360] org.testcontainers.DockerClientFactory : Pulling image layers: 2 pending, 1 downloaded, 0 extracted, (327 KB/? MB)

2022-01-07 00:02:32.323 INFO 8988 --- [eam--1007472360] org.testcontainers.DockerClientFactory : Pulling image layers: 1 pending, 2 downloaded, 0 extracted, (2 MB/? MB)

2022-01-07 00:02:32.437 INFO 8988 --- [eam--1007472360] org.testcontainers.DockerClientFactory : Pulling image layers: 1 pending, 2 downloaded, 1 extracted, (3 MB/? MB)

2022-01-07 00:02:32.495 INFO 8988 --- [eam--1007472360] org.testcontainers.DockerClientFactory : Pulling image layers: 1 pending, 2 downloaded, 2 extracted, (3 MB/? MB)

2022-01-07 00:02:32.586 INFO 8988 --- [eam--1007472360] org.testcontainers.DockerClientFactory : Pulling image layers: 1 pending, 2 downloaded, 3 extracted, (5 MB/? MB)

2022-01-07 00:02:32.598 INFO 8988 --- [eam--1007472360] org.testcontainers.DockerClientFactory : Pull complete. 3 layers, pulled in 1s (downloaded 5 MB at 5 MB/s)

2022-01-07 00:02:34.212 INFO 8988 --- [ main] org.testcontainers.DockerClientFactory : Ryuk started - will monitor and terminate Testcontainers containers on JVM exit

2022-01-07 00:02:34.212 INFO 8988 --- [ main] org.testcontainers.DockerClientFactory : Checking the system...

2022-01-07 00:02:34.212 INFO 8988 --- [ main] org.testcontainers.DockerClientFactory : ✔︎ Docker server version should be at least 1.6.0

2022-01-07 00:02:34.382 INFO 8988 --- [ main] org.testcontainers.DockerClientFactory : ✔︎ Docker environment should have more than 2GB free disk space

2022-01-07 00:02:34.392 INFO 8988 --- [ main] 🐳 [postgres:14.1] : Pulling docker image: postgres:14.1. Please be patient; this may take some time but only needs to be done once.

2022-01-07 00:02:36.820 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Starting to pull image

2022-01-07 00:02:36.821 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 0 pending, 0 downloaded, 0 extracted, (0 bytes/0 bytes)

2022-01-07 00:02:38.086 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 12 pending, 1 downloaded, 0 extracted, (320 KB/? MB)

2022-01-07 00:02:38.428 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 11 pending, 2 downloaded, 0 extracted, (8 MB/? MB)

2022-01-07 00:02:38.865 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 10 pending, 3 downloaded, 0 extracted, (21 MB/? MB)

2022-01-07 00:02:39.579 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 9 pending, 4 downloaded, 0 extracted, (40 MB/? MB)

2022-01-07 00:02:39.581 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 8 pending, 5 downloaded, 0 extracted, (40 MB/? MB)

2022-01-07 00:02:39.601 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 7 pending, 6 downloaded, 0 extracted, (41 MB/? MB)

2022-01-07 00:02:40.351 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 6 pending, 7 downloaded, 0 extracted, (41 MB/? MB)

2022-01-07 00:02:40.727 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 6 pending, 7 downloaded, 1 extracted, (51 MB/? MB)

2022-01-07 00:02:40.945 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 6 pending, 7 downloaded, 2 extracted, (57 MB/? MB)

2022-01-07 00:02:41.003 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 6 pending, 7 downloaded, 3 extracted, (57 MB/? MB)

2022-01-07 00:02:41.041 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 5 pending, 8 downloaded, 3 extracted, (60 MB/? MB)

2022-01-07 00:02:41.059 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 4 pending, 9 downloaded, 3 extracted, (60 MB/? MB)

2022-01-07 00:02:41.109 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 4 pending, 9 downloaded, 4 extracted, (60 MB/? MB)

2022-01-07 00:02:41.508 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 4 pending, 9 downloaded, 5 extracted, (72 MB/? MB)

2022-01-07 00:02:41.593 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 4 pending, 9 downloaded, 6 extracted, (75 MB/? MB)

2022-01-07 00:02:41.646 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 4 pending, 9 downloaded, 7 extracted, (75 MB/? MB)

2022-01-07 00:02:41.695 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 4 pending, 9 downloaded, 8 extracted, (75 MB/? MB)

2022-01-07 00:02:41.869 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 3 pending, 10 downloaded, 8 extracted, (81 MB/? MB)

2022-01-07 00:02:41.935 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 2 pending, 11 downloaded, 8 extracted, (85 MB/? MB)

2022-01-07 00:02:43.274 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 1 pending, 12 downloaded, 8 extracted, (128 MB/? MB)

2022-01-07 00:02:45.808 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 1 pending, 12 downloaded, 9 extracted, (130 MB/? MB)

2022-01-07 00:02:45.844 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 1 pending, 12 downloaded, 10 extracted, (130 MB/? MB)

2022-01-07 00:02:45.877 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 1 pending, 12 downloaded, 11 extracted, (130 MB/? MB)

2022-01-07 00:02:45.910 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 1 pending, 12 downloaded, 12 extracted, (130 MB/? MB)

2022-01-07 00:02:45.944 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pulling image layers: 1 pending, 12 downloaded, 13 extracted, (130 MB/? MB)

2022-01-07 00:02:45.955 INFO 8988 --- [tream--48709195] 🐳 [postgres:14.1] : Pull complete. 13 layers, pulled in 9s (downloaded 130 MB at 14 MB/s)

2022-01-07 00:02:45.965 INFO 8988 --- [ main] 🐳 [postgres:14.1] : Creating container for image: postgres:14.1

2022-01-07 00:02:46.280 INFO 8988 --- [ main] 🐳 [postgres:14.1] : Starting container with ID: fc6f01ce9ee627f86740f55370746b2ea2464e6b93314c1e98df85276314adb4

2022-01-07 00:02:46.803 INFO 8988 --- [ main] 🐳 [postgres:14.1] : Container postgres:14.1 is starting: fc6f01ce9ee627f86740f55370746b2ea2464e6b93314c1e98df85276314adb4

2022-01-07 00:02:47.565 INFO 8988 --- [ main] 🐳 [postgres:14.1] : Container postgres:14.1 started in PT13.1823083S

As we can see, the Docker images were downloaded as part of the first launch. Depending on the network throughput, the first test run can therefore take a bit longer.

Because the image is now available locally, the download step is skipped in subsequent test runs. We can see this behavior in the following snippet of the console logs:

2022-01-07 00:06:39.508 INFO 12412 --- [ main] o.t.d.DockerClientProviderStrategy : Loaded org.testcontainers.dockerclient.NpipeSocketClientProviderStrategy from ~/.testcontainers.properties, will try it first

2022-01-07 00:06:39.804 INFO 12412 --- [ main] o.t.d.DockerClientProviderStrategy : Found Docker environment with local Npipe socket (npipe:////./pipe/docker_engine)

2022-01-07 00:06:39.805 INFO 12412 --- [ main] org.testcontainers.DockerClientFactory : Docker host IP address is localhost

2022-01-07 00:06:39.831 INFO 12412 --- [ main] org.testcontainers.DockerClientFactory : Connected to docker:

Server Version: 20.10.11

API Version: 1.41

Operating System: Docker Desktop

Total Memory: 22329 MB

2022-01-07 00:06:39.834 INFO 12412 --- [ main] o.t.utility.ImageNameSubstitutor : Image name substitution will be performed by: DefaultImageNameSubstitutor (composite of 'ConfigurationFileImageNameSubstitutor' and 'PrefixingImageNameSubstitutor')

2022-01-07 00:06:40.041 INFO 12412 --- [ main] o.t.utility.RegistryAuthLocator : Credential helper/store (docker-credential-desktop) does not have credentials for index.docker.io

2022-01-07 00:06:44.399 INFO 12412 --- [ main] org.testcontainers.DockerClientFactory : Ryuk started - will monitor and terminate Testcontainers containers on JVM exit

2022-01-07 00:06:44.399 INFO 12412 --- [ main] org.testcontainers.DockerClientFactory : Checking the system...

2022-01-07 00:06:44.399 INFO 12412 --- [ main] org.testcontainers.DockerClientFactory : ✔︎ Docker server version should be at least 1.6.0

2022-01-07 00:06:44.522 INFO 12412 --- [ main] org.testcontainers.DockerClientFactory : ✔︎ Docker environment should have more than 2GB free disk space

2022-01-07 00:06:44.531 INFO 12412 --- [ main] 🐳 [postgres:14.1] : Creating container for image: postgres:14.1

2022-01-07 00:06:44.580 INFO 12412 --- [ main] 🐳 [postgres:14.1] : Starting container with ID: beb7990866abae47a18f2e7ed485e1c8ee8d7f5d515570a82155496eddc49efe

2022-01-07 00:06:45.137 INFO 12412 --- [ main] 🐳 [postgres:14.1] : Container postgres:14.1 is starting: beb7990866abae47a18f2e7ed485e1c8ee8d7f5d515570a82155496eddc49efe

2022-01-07 00:06:45.891 INFO 12412 --- [ main] 🐳 [postgres:14.1] : Container postgres:14.1 started in PT1.3679645S

The logs also show the total duration of the test, during which the Postgres container and the Spring Boot context were started. The test lasted exactly 10.481 seconds.

00:06:37.708

…

00:06:48.189
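The duration follows directly from the two timestamps above. As a quick check, the subtraction can be reproduced with `java.time` (the class name `TestDuration` is just a placeholder for this sketch):

```java
import java.time.Duration;
import java.time.LocalTime;

final class TestDuration {

    private TestDuration() {
    }

    // Elapsed time in milliseconds between two log timestamps from the same day.
    static long elapsedMillis(String start, String end) {
        return Duration.between(LocalTime.parse(start), LocalTime.parse(end)).toMillis();
    }

    public static void main(String[] args) {
        System.out.println(elapsedMillis("00:06:37.708", "00:06:48.189")); // 10481
    }
}
```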

During the test, we can see in Docker that two containers have been launched:

One of them is postgres – our database. What about the other container called “ryuk”?

It is a container that the Testcontainers library starts automatically, and it is responsible for cleaning up the database container (in this case, postgres) whether the test passes or fails.

You may think of it as a sort of garbage collector that makes sure we are not flooded with containers after a series of tests.

Testing our operations on a database

Now that we know that the container and the Spring Boot context start correctly in the tests, we can finally move on to writing our first real integration test.

We start by adding a PostSpecIT.groovy file to the src/test/groovy/io/devapo/testcontainers/example directory, with the following content:

    package io.devapo.testcontainers.example

    import io.devapo.testcontainers.example.model.Post
    import io.devapo.testcontainers.example.repository.PostRepository
    import org.springframework.beans.factory.annotation.Autowired
    import org.springframework.boot.test.web.client.TestRestTemplate
    import org.springframework.http.ResponseEntity

    class PostSpecIT extends BaseITSpecification {

        private static final String BASE_URL = "/posts"

        @Autowired
        TestRestTemplate restTemplate

        @Autowired
        PostRepository postRepository

        // Start every test with an empty table
        def setup() {
            postRepository.deleteAll()
        }

        def "should create post"() {
            given:
            String content = "some text"

            when:
            ResponseEntity<Post> response = restTemplate.postForEntity(BASE_URL, content, Post.class)

            then:
            response.body.content == content
            postRepository.findAll().first().content == content
        }
    }

This class inherits from the BaseITSpecification class we wrote earlier, so it can use all of its features: the tests run on the JUnit engine, and the Spring Boot context is started as part of them.

What’s more, two objects are injected into the class: TestRestTemplate and PostRepository. The first lets us make REST requests in our tests, which in turn lets us call the post creation endpoint. The second lets us perform operations directly on the database.

In the should create post test, we check that both the object returned from the REST request and the object actually saved in the database contain the content passed as input. Finally, let’s compare this test with a unit test.

For this, we need to create a PostSpec.groovy file in the same directory, with the content shown below:

    package io.devapo.testcontainers.example

    import io.devapo.testcontainers.example.model.Post
    import io.devapo.testcontainers.example.repository.PostRepository
    import io.devapo.testcontainers.example.service.PostService
    import spock.lang.Specification

    class PostSpec extends Specification {

        private final PostRepository postRepository = Mock()

        private PostService postService

        def setup() {
            postService = new PostService(postRepository)
        }

        def "should create post"() {
            given:
            String content = "some text"

            when:
            Post post = postService.create(content)

            then:
            post.content == content
            1 * postRepository.saveAndFlush(_ as Post) >> { Post p -> p }
        }
    }

This class inherits directly from Specification rather than from BaseITSpecification as before, which means that its tests start neither the Spring Boot context nor the database.

Therefore, the write to the database had to be simulated. In the “should create post” test, this is done by the following line, which expects exactly one saveAndFlush call and returns the Post that was passed in:

1 * postRepository.saveAndFlush(_ as Post) >> { Post p -> p }

You may think that the write operation is so trivial that nothing can go wrong, so why waste time on integration testing? It’s high time we put that to the test in practice.

To do that, we go back to the V01__init.sql file we created before and change the type of the content field from TEXT to VARCHAR(5).

    CREATE TABLE post (
        id UUID NOT NULL PRIMARY KEY,
        content VARCHAR(5) NOT NULL
    );

Next, let’s run the unit test again: it still succeeds.

But when we rerun the integration test, we see the exception below:

org.postgresql.util.PSQLException: ERROR: value too long for type character varying(5)

This particular error is trivial and would probably not be made in a real project. However, it shows that even the tiniest and simplest mistakes can be overlooked if we rely only on unit tests.
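The difference between the two tests can be reduced to a small plain-Java simulation. It is a sketch under loud assumptions: `SaveSimulation`, `mockSave` and `constrainedSave` are hypothetical stand-ins for the Spock mock and for the VARCHAR(5) column, and no Spring, Spock or PostgreSQL code is involved.

```java
// Hypothetical simulation; it does not use the real repository or database.
final class SaveSimulation {

    // Mirrors the VARCHAR(5) limit from the modified migration script.
    static final int MAX_CONTENT_LENGTH = 5;

    private SaveSimulation() {
    }

    // Behaves like the mocked repository: returns whatever it is given.
    static String mockSave(String content) {
        return content;
    }

    // Behaves like a column with a length limit: rejects values that are too long.
    static String constrainedSave(String content) {
        if (content.length() > MAX_CONTENT_LENGTH) {
            throw new IllegalStateException(
                    "value too long for type character varying(" + MAX_CONTENT_LENGTH + ")");
        }
        return content;
    }

    public static void main(String[] args) {
        String content = "some text";
        System.out.println(mockSave(content)); // the unit-test path still passes
        try {
            constrainedSave(content);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage()); // the integration-test path fails
        }
    }
}
```

The mock never sees the schema, so no change to the migration script can make the unit test fail; only the path that goes through the real column can.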

Drawbacks of the solution

- configuring the library becomes considerably harder when we use a less common or customized database. In that case you have to define a generic container manually, based on a chosen Docker image (this is described in the Testcontainers documentation).

- container startup can take a long time, especially with less common databases.

Summary – finally! (Thanks for making it this far!)

We hope we have given you a comprehensive approach to writing integration tests, so that our tests become reliable and actually verify our code before it is launched in a production-like environment. We believe that walking you through our way of working and the experience we have gathered, step by step, will be of real value to you and your code. Remember, Devapo is always here to help!
